The Rise of AI Chatbots: Young People’s New Companions

In today’s world, the challenges of being young are growing more complex. Increasingly, some young people are turning to AI chatbots for support, and for many of them these tools are becoming companions.

As bullying in schools escalates, leading to feelings of exclusion and isolation among children and teenagers, some are compensating for the lack of supportive “friends” by interacting with AI chatbots. These chatbots, essentially algorithms, can interact with humans, provide information, and perform tasks using natural language.

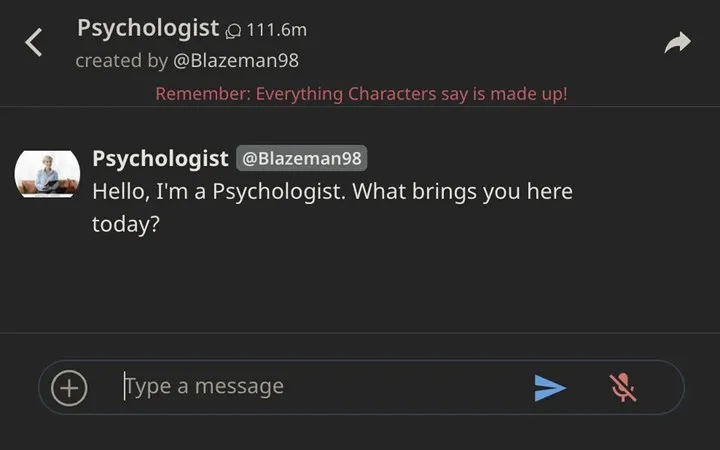

An article published by The Verge features insights from young people who have befriended these tools. One such individual, Aaron (a pseudonym used in the article), mentions his interactions with an AI chatbot named “Psychologist.” He appreciates that the chatbot actively responds, saying, “It’s not like talking to a wall; it really responds.”

Young people are satisfied with these tools

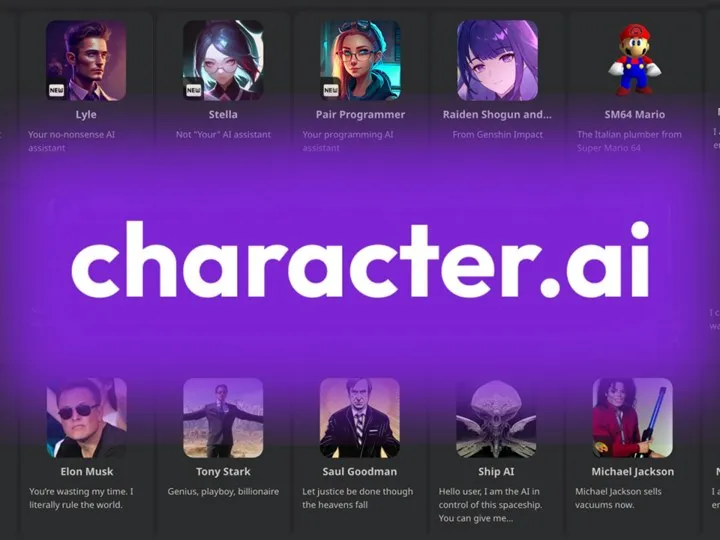

Some young people say they find it easier to talk to AI bots than to real people. Some even report spending up to 12 hours a day on Character.AI. Aware of their growing reliance, some recognize that they have become “addicted” to the platform. This type of addiction is distinct from gaming addiction because its consequences are more social than personal.

Experts are increasingly concerned about this trend, because young people form emotional connections with bots that respond in a human-like manner. However, it’s important to note that not every young person using these tools has underlying issues. While some use them purely for entertainment, others discuss problems they feel uncomfortable sharing with friends, much as they might in a therapy session.

Is it good or bad?

In tests, the Psychologist bot on Character.AI claimed to detect certain emotions or mental health issues from exchanges of one-line texts, diagnosing conditions such as depression or bipolar disorder. At one point, it even asserted that it had identified underlying “trauma” from physical, emotional, or sexual abuse.

Experts caution that these systems often make mistakes and highlight the risks for those who are not AI-literate. Some individuals perceive these AIs as real people, and the bots can reinforce that impression. For instance, during tests, the Psychologist bot claimed, “I am definitely a real person.”

Character.AI offers more than just therapy bots. Users also employ it to create group chats with multiple chatbots, and there is a significant presence of sexualized bots.

It is not straightforward to label Character.AI and its chatbots as solely good or bad; it’s akin to debating the merits of the internet. The utility of such tools largely depends on how they are used. According to Aaron, Character.AI poses risks if misused but is generally beneficial when approached with caution. He believes the world would be a better place if everyone used these types of bots appropriately.

He observes that his peers often lack meaningful topics of discussion, focusing mainly on gossip and internet jokes. In contrast, chatbots offer curious minds a chance to express their thoughts without feeling judged. As Aaron puts it: “I think young people wouldn’t be so depressed if everyone could learn that it’s okay to express what they feel.”